Understanding the Importance of Big-O Notation in Coding Interviews

In this lesson, we will introduce Big-O notation, a mathematical tool used to describe algorithm efficiency.

Introduction

Big-O notation is a fundamental concept in computer science for describing the efficiency of algorithms. It gives a standardized way to express how an algorithm's running time (or memory use) grows as the input size increases, which is what determines the algorithm's scalability.

Strictly speaking, Big-O notation gives an upper bound on an algorithm's growth rate, and it is most often used to describe the worst-case scenario. This lets developers analyze and compare different algorithms based on their efficiency.

The notation is written as O(f(n)), where f(n) describes how the algorithm's cost grows with the input size n. Constant factors and lower-order terms are dropped, so an algorithm that performs 3n + 5 steps is O(n). Understanding Big-O notation is crucial for designing and optimizing algorithms and for using computational resources efficiently.
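As a rough illustration of what f(n) measures, the Python sketch below counts the basic operations performed while summing a list. The function name `sum_with_step_count` and the choice of what counts as one "step" are assumptions made for illustration. The point is only that the count comes out to n + 1, which grows linearly with n, so the algorithm is O(n).

```python
def sum_with_step_count(values):
    """Return (total, steps), where steps counts the basic operations performed.

    What counts as a "step" here is an illustrative assumption: one step for
    initialising the total, plus one step per element added.
    """
    steps = 0
    total = 0          # initialise the running total
    steps += 1
    for v in values:   # the loop body runs len(values) times
        total += v
        steps += 1
    return total, steps


for n in (10, 100, 1_000):
    _, steps = sum_with_step_count(list(range(n)))
    print(n, steps)
```

For inputs of 10, 100 and 1,000 elements, the step counts are 11, 101 and 1,001. Multiplying n by 10 multiplies the work by roughly 10, and the extra constant step disappears in the O(n) classification.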

Understanding algorithm efficiency

An algorithm's efficiency is measured along two dimensions:

- Time complexity

- Space complexity

Time and space complexity are fundamental concepts in algorithm analysis that help measure the efficiency of an algorithm.

Time complexity describes how an algorithm's running time (usually counted as the number of basic operations) grows with the input size, while space complexity describes how much memory the algorithm needs as the input grows.
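A minimal Python sketch of the difference, assuming a simple list-reversal task chosen purely for illustration (both function names are hypothetical). Both functions take O(n) time because each touches every element once. The first swaps elements in place and needs only O(1) extra space. The second builds a new list as large as the input, so its extra space is O(n).

```python
def reverse_in_place(items):
    """O(n) time, O(1) extra space: swap pairs working inward from both ends."""
    left, right = 0, len(items) - 1
    while left < right:
        items[left], items[right] = items[right], items[left]
        left += 1
        right -= 1
    return items


def reversed_copy(items):
    """O(n) time, O(n) extra space: build a brand-new list of the same length."""
    result = []
    for i in range(len(items) - 1, -1, -1):
        result.append(items[i])
    return result
```

The trade-off is that `reversed_copy` leaves the original list untouched, which can matter more than the extra memory in some programs.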

Big-O notation is commonly used to express both time and space complexity. It provides a standardized way to describe the upper bound or worst-case scenario of an algorithm's performance as the input size increases.

By understanding and expressing time and space complexity using Big-O notation, programmers can analyze and compare algorithms based on their efficiency and resource requirements.

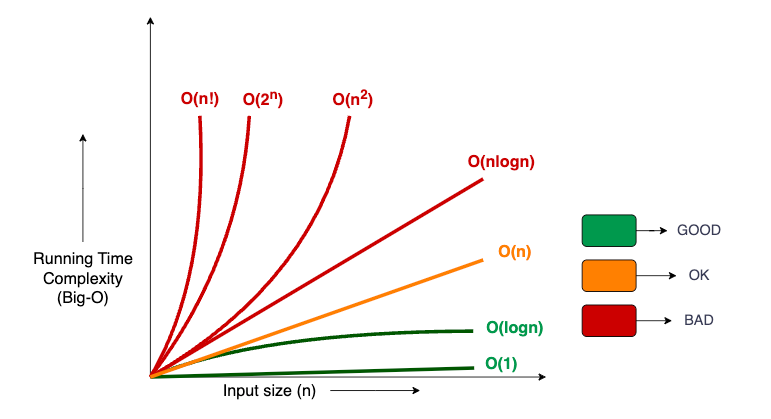

Sketch: Big-O complexities

The sketch plots running time against input size n for the most common complexity classes. Almost all algorithms fall under one of these complexities.

Any algorithm that falls:

- Under O(1) or O(log N) is green, which is good.

- Under O(N) is orange, which is OK.

- Outside the above two categories is generally considered bad, because its running time grows faster than its input.
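To connect these classes to code, here is a minimal Python sketch with one function per class: list indexing for O(1), binary search for O(log N), linear search for O(N), and a nested loop over all pairs for O(N²). The function names and step counters are hypothetical, added only to make the growth visible.

```python
def constant_lookup(items, i):
    # O(1): indexing a Python list takes the same time regardless of its length
    return items[i]


def binary_search_steps(sorted_items, target):
    # O(log N): each iteration halves the remaining search range
    lo, hi, steps = 0, len(sorted_items) - 1, 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            return mid, steps
        if sorted_items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1, steps


def linear_search_steps(items, target):
    # O(N): in the worst case every element is inspected once
    steps = 0
    for index, value in enumerate(items):
        steps += 1
        if value == target:
            return index, steps
    return -1, steps


def count_pairs_steps(items):
    # O(N^2): visits every unordered pair (i, j) with i < j
    steps = 0
    n = len(items)
    for i in range(n):
        for j in range(i + 1, n):
            steps += 1
    return steps


data = list(range(1024))  # sorted input of size N = 1024
print(constant_lookup(data, 5))
print(binary_search_steps(data, 1024))  # worst case: value not present
print(linear_search_steps(data, 1024))  # worst case: value not present
print(count_pairs_steps(data))
```

On a sorted list of 1,024 integers, searching for a value that is not present takes 11 iterations with binary search but 1,024 with linear search, and visiting every pair takes 523,776 iterations.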

Why is Big-O important in coding interviews?

In coding interviews, Big-O notation plays a crucial role in assessing a programmer's competence and problem-solving skills. It allows interviewers to gauge a candidate's understanding of algorithmic efficiency and scalability.

Interviewers can evaluate how well a candidate predicts and addresses performance issues by asking them to analyze an algorithm's time and space complexity using Big-O notation.

Moreover, Big-O notation enables comparing and contrasting different algorithms, helping interviewers assess a candidate's ability to make informed choices based on performance analysis. Demonstrating proficiency in Big-O notation showcases a candidate's capability to optimize code and design efficient solutions, making it an essential skill for coding interviews.
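As an illustration of the kind of comparison this involves, consider a hypothetical interview-style task: decide whether any two numbers in a list add up to a target. The Python sketch below shows two correct solutions. The function names are made up for this example. The brute-force version checks every pair in O(n²) time with O(1) extra space. The hashing version makes a single pass in O(n) average time but uses O(n) extra space for the set of values seen so far.

```python
def has_pair_with_sum_brute_force(nums, target):
    # O(n^2) time, O(1) extra space: check every pair (i, j) with i < j
    n = len(nums)
    for i in range(n):
        for j in range(i + 1, n):
            if nums[i] + nums[j] == target:
                return True
    return False


def has_pair_with_sum_hashing(nums, target):
    # O(n) average time, O(n) extra space: set membership is O(1) on average
    seen = set()
    for x in nums:
        if target - x in seen:
            return True
        seen.add(x)
    return False
```

Being able to state this trade-off, faster running time in exchange for more memory, is the kind of reasoning Big-O notation makes explicit.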

Algorithm scalability

A solid understanding of Big-O notation is vital for every programmer because it determines an algorithm's scalability. The notation bounds how the number of operations an algorithm performs grows as the size of its input grows.
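A minimal Python sketch of what this bound means for scalability, using idealized operation counts rather than measured timings (the helper `operation_counts` is hypothetical). When the input size doubles, an O(log n) algorithm needs one extra step, an O(n) algorithm does twice the work, and an O(n²) algorithm does four times the work.

```python
import math


def operation_counts(n):
    """Idealized operation counts for three growth rates at input size n."""
    return {
        "O(log n)": math.ceil(math.log2(n)),
        "O(n)": n,
        "O(n^2)": n * n,
    }


# Double the input size twice and compare how each count responds.
for n in (1024, 2048, 4096):
    print(n, operation_counts(n))
```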

By comprehending Big-O notation, programmers gain insight into an algorithm's efficiency and performance. It guides the optimization process and helps them make efficient use of computational resources.

Therefore, it is crucial for programmers to grasp Big O notation, as it empowers them to design and implement algorithms that can handle increasingly larger datasets while maintaining efficiency.

Gopi Gorantala Newsletter
